When you have no clue what LLMs actually are and what we mean by parameters but you still tweet —

Replies

@serious_mehta

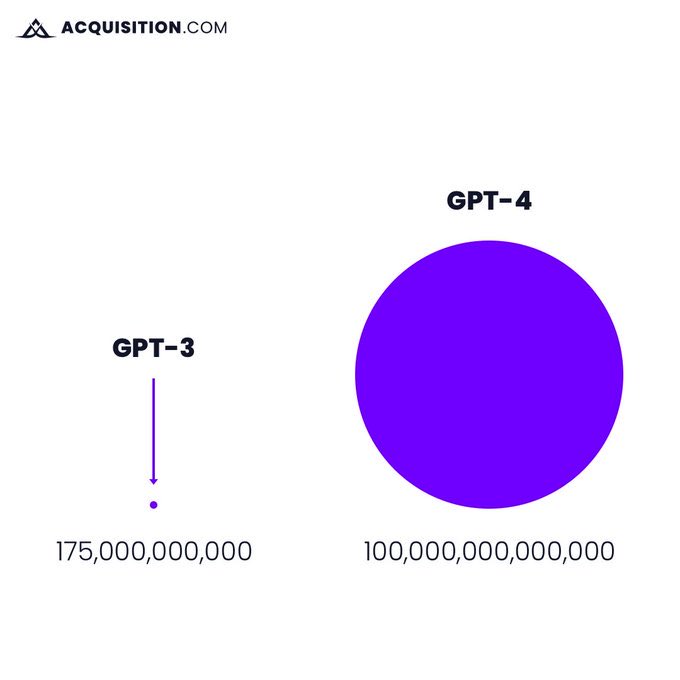

If we increase the parameters, then GPT-7 might have more knowledge than all humans combined.

Scientists won't have to research anything, they can just ask GPT-7 😂

@serious_mehta

I will tell you one thing though: the math behind all this is high school level algebra at best.

@serious_mehta

Besides prediction accuracy on a test dataset, how could a model’s “knowledge” be evaluated? Is accuracy even a good proxy for “knowledge”?

In general, does model performance correlate positively with dataset size and/or model complexity (number of parameters, layers, etc.)?
