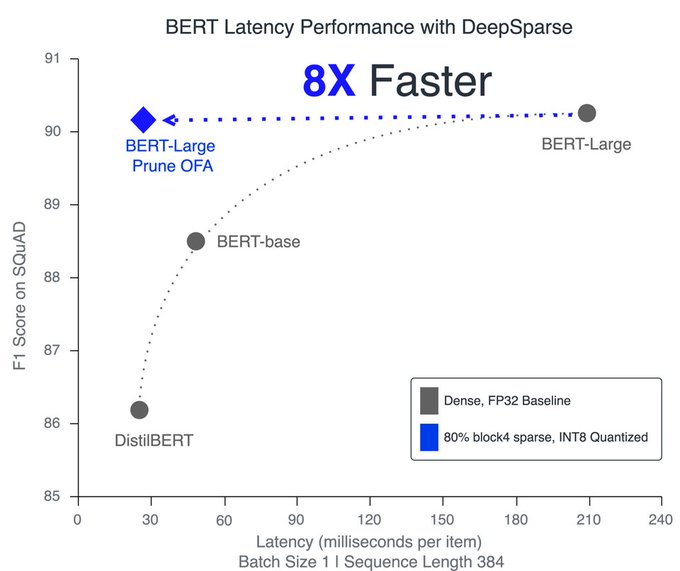

BERT-Large (345M parameters) is now faster than the much smaller DistilBERT (66M parameters) while keeping the accuracy of the larger BERT-Large!

It delivers an 8x latency speedup on commodity CPUs 🚀

🙏 @ZafrirOfir, @guybd35, @markurtz_ & teams for fantastic collab!
