Can we combine integer linear programming with exemplar selection to improve In-Context Learning?

Yes! All you need is to optimize your Knapsack 🎒

The paper by @TongletJ et al. on #SEER was just accepted to #EMNLP2023. Learn more in this 🧵 (1/8)

📰


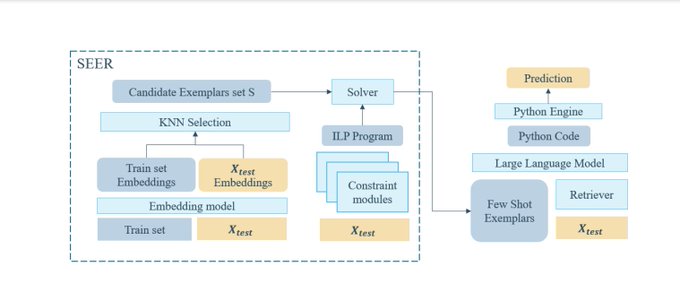

It has been observed that the performance of ICL depends heavily on the selection of exemplars. @TongletJ et al. show how this combinatorial optimization problem can be formulated as a Knapsack Integer Linear Program and solved efficiently with deterministic solvers. (2/🧵)


A Knapsack program consists of an objective function, a capacity constraint, and optional additional constraints. (3/🧵)
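For readers who want to see the three parts spelled out, here is a generic 0/1 Knapsack program as a rough sketch. The notation is an assumption for illustration and is not taken from the paper: $r_i$ is a relevance score for candidate exemplar $i$, $w_i$ is its size, $C$ is the capacity, and $x_i$ indicates whether exemplar $i$ is selected.

```latex
\begin{aligned}
\max_{x} \quad & \sum_{i=1}^{n} r_i x_i
  && \text{(objective function)} \\
\text{s.t.} \quad & \sum_{i=1}^{n} w_i x_i \le C
  && \text{(capacity constraint)} \\
& x_i \in \{0, 1\}, \quad i = 1, \dots, n
  && \text{(binary selection variables)}
\end{aligned}
```

Any optional additional constraints would be added as further linear inequalities over the same $x_i$.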


In their #EMNLP2023 paper, the authors use a capacity constraint to control the size of the prompt in tokens, and diversity constraints to favor the selection of exemplars that share the same reasoning properties as the test problem. (4/🧵)
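To make the two kinds of constraints concrete, here is a minimal Python sketch. It is not the authors' implementation: the `Exemplar` fields, the `modality` property, the `min_matching` threshold, and the scores are all hypothetical, and the exhaustive search stands in for a proper ILP solver, which you would want for any realistic pool size.

```python
from itertools import combinations
from typing import NamedTuple


class Exemplar(NamedTuple):
    name: str
    score: float   # hypothetical relevance to the test problem
    tokens: int    # length of the exemplar in tokens
    modality: str  # hypothetical reasoning property, e.g. "table" or "text"


def select_exemplars(pool, token_budget, modality, min_matching):
    """Pick the highest-scoring subset that satisfies both constraints.

    Brute force over all subsets; an ILP solver would replace this loop.
    Returns an empty list if no subset is feasible.
    """
    best, best_score = (), float("-inf")
    for k in range(len(pool) + 1):
        for subset in combinations(pool, k):
            # Capacity constraint: total prompt size must fit the budget.
            if sum(e.tokens for e in subset) > token_budget:
                continue
            # Diversity-style constraint (assumed form): at least
            # `min_matching` exemplars share the test problem's property.
            if sum(e.modality == modality for e in subset) < min_matching:
                continue
            total = sum(e.score for e in subset)
            if total > best_score:
                best, best_score = subset, total
    return [e.name for e in best]


# Toy pool with made-up scores and token counts.
pool = [
    Exemplar("a", 0.9, 300, "text"),
    Exemplar("b", 0.8, 250, "table"),
    Exemplar("c", 0.6, 200, "table"),
    Exemplar("d", 0.5, 150, "text"),
]

table_pick = select_exemplars(pool, token_budget=600, modality="table", min_matching=2)
text_pick = select_exemplars(pool, token_budget=600, modality="text", min_matching=2)
print(table_pick, text_pick)
```

With the same pool and budget, changing the property required by the test problem changes which exemplars are selected, which is the effect the extra constraints are meant to have.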


They propose #SEER, a method to automatically generate a Knapsack program for #HybridQA problems. It outperforms exemplar selection baselines on the FinQA and TAT-QA datasets. (5/🧵) #EMNLP2023


Tokens are the main pricing unit for commercial LLMs. Thanks to capacity constraints, #SEER directly optimizes the prompt size to meet restricted token budgets. (6/🧵) #EMNLP2023
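As a rough illustration of how a token budget shapes the selection, the sketch below solves a plain 0/1 knapsack with textbook dynamic programming for several budgets. This is a stand-in technique, not the solver used in the paper, and the scores and token counts are invented.

```python
def knapsack(scores, tokens, budget):
    """Classic 0/1 knapsack DP over integer token budgets.

    Returns the sorted indices of the selected exemplars.
    """
    n = len(scores)
    # best[i][b]: best total score using the first i exemplars within b tokens
    best = [[0] * (budget + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for b in range(budget + 1):
            best[i][b] = best[i - 1][b]  # skip exemplar i-1
            if tokens[i - 1] <= b:
                take = best[i - 1][b - tokens[i - 1]] + scores[i - 1]
                if take > best[i][b]:
                    best[i][b] = take
    # Backtrack to recover which exemplars were taken.
    chosen, b = [], budget
    for i in range(n, 0, -1):
        if best[i][b] != best[i - 1][b]:
            chosen.append(i - 1)
            b -= tokens[i - 1]
    return sorted(chosen)


# Hypothetical integer scores and token lengths for four exemplars.
scores = [9, 8, 6, 5]
tokens = [300, 250, 200, 150]

for budget in (300, 400, 600):
    print(budget, knapsack(scores, tokens, budget))
```

Tightening the budget does not simply drop the last exemplar. The optimal set can change entirely, which is why the budget is handled inside the optimization rather than by truncating a ranked list.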


If you're interested in our research, we provide open access to our code and results: ➡️ (7/🧵) #EMNLP2023


And consider following the authors @TongletJ (@UKPLab / @KU_Leuven), Manon Reusens (@ManonReusens), Philipp Borchert (@IESEG / @KU_Leuven) and @BartBaesens (@KU_Leuven / @unisouthampton), if you are interested in more information or an exchange of ideas. (8/8)

See you in 🇸🇬!

#EMNLP2023
