@_jasonwei

If you're interested in our research, we provide open access to our code and results, including the model-generated responses, so that other researchers can build on them:

Code ➡️

Data ➡️

(5/)


Are Emergent Abilities in Large Language Models just In-Context Learning?

Spoiler: YES 🤯

Through a series of over 1,000 experiments, we provide compelling evidence:

Our results allay safety concerns regarding latent hazardous abilities.

A 🧵👇

#NLProc


It was previously reported by @_jasonwei et al. that Large Language Models have Emergent Abilities.

It has been claimed that the »unpredictable emergence« of so many abilities indicates that latent hazardous abilities may exist. (2/)


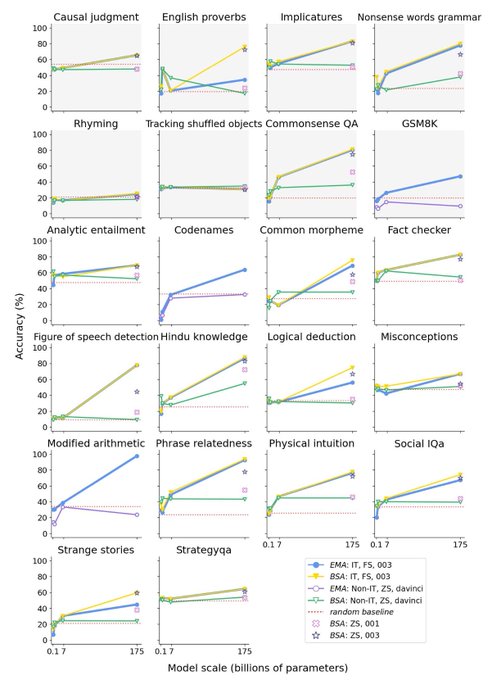

We perform the first set of experiments to test models without in-context learning and show that in this setting only 2 of the 22 tasks originally reported to be emergent are »truly emergent«.

Of these 2, one tests linguistic skills and the other tests memory.

No »Reasoning« 🤔 (3/)


Does Instruction Tuning (including on Code) lead to »reasoning«?

Turns out: No 🚫

Without in-context learning, the sets of tasks solvable by T5 770M and GPT-3 175B are comparable! 🤯😯 (4/)

#NLProc


And consider following our authors Sheng Lu, Irina Bigoulaeva (@IBigoulaeva), Rachneet Sachdeva (@rachneet4), Harish Tayyar Madabushi (@harish) and Iryna Gurevych (@IGurevych) at @BathNLP and the @UKPLab if you are interested in more information or an exchange of ideas. (6/6)
